Glossary

3G HD

At twice the bit rate of HD SDI (2.97 Gb/s versus 1.485 Gb/s), 3G can transmit 1080p (50 or 59.94) and 4:4:4 RGB signals on a single cable. 3G Level A is used for uncompressed 1080p 50/60 signals in broadcast and satellite applications. 3G Level B is used for uncompressed 1080i 4:4:4 signals in post production.

AES/EBU

The digital audio standard defined jointly by the Audio Engineering Society and the European Broadcasting Union. AES/EBU or AES3 describes a serial bitstream which carries two audio channels, thus an AES stream is a stereo pair. The AES/EBU standard covers a wide range of sample rates and quantization (bit depth). In television systems, these will generally be 48 kHz and either 20 or 24 bits.

AFD

Active Format Description is a method to carry information regarding the aspect ratio of the video content. The specification of AFD was standardized by SMPTE in 2007 and is now beginning to appear in the marketplace. AFD can be included in both SD and HD SDI transport systems. There is no legacy analog implementation. (See WSS).

ASI

A commonly used transport method for MPEG-2 video streams, ASI or Asynchronous Serial Interface operates at the same 270 Mb/s data rate as SD SDI. This makes it easy to carry an ASI stream through existing digital television infrastructure. Known more formally as DVB-ASI, this transport mechanism can be used to carry multiple program channels.

Aspect Ratio

The ratio of the horizontal measurement of an image to its vertical measurement (width to height). 4:3 is the aspect ratio for standard definition video formats and television, and 16:9 is the ratio for high definition. Converting between formats of unequal ratios is done by letterboxing (horizontal bars) or pillar boxing (vertical pillars) in order to keep the original format’s aspect ratio.
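As a rough illustration of letterboxing and pillar boxing, the sketch below fits a source image into a display of a different shape without distorting it. The function name is hypothetical and square pixels are assumed, which is not true of every video format.

```python
from fractions import Fraction

def fit_preserving_aspect(src_w, src_h, dst_w, dst_h):
    """Scale a source image to fit a display while keeping its aspect ratio.

    Returns (scaled_width, scaled_height, bar_type). Square pixels are assumed.
    """
    src = Fraction(src_w, src_h)
    dst = Fraction(dst_w, dst_h)
    if src > dst:
        # Source is wider than the display: fill the width, bars top and bottom.
        return dst_w, round(dst_w / src), "letterbox"
    if src < dst:
        # Source is narrower than the display: fill the height, bars left and right.
        return round(dst_h * src), dst_h, "pillarbox"
    return dst_w, dst_h, "none"

wide_on_narrow = fit_preserving_aspect(16, 9, 640, 480)
narrow_on_wide = fit_preserving_aspect(4, 3, 1920, 1080)
```

Under these assumptions, a 16:9 picture on a 640 x 480 display occupies 640 x 360 with bars above and below, and a 4:3 picture on a 1920 x 1080 display occupies 1440 x 1080 with bars at the sides.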

Bandwidth

Strictly speaking, this refers to the range of frequencies (i.e. the width of the band of frequency) used by a signal, or carried by a transmission channel. Generally, wider bandwidth will carry and reproduce a signal with greater fidelity and accuracy.

Beta

Sony Beta-SP videotape machines use an analog component format that is similar to SMPTE, but differs in the amplitude of the color difference signals. It may also carry setup on the luminance channel.

Bit

A binary digit, or bit, is the smallest amount of information that can be stored or transmitted digitally by electrical, optical, magnetic, or other means. A single bit can take on one of two states: On/Off, Low/High, Asserted/Deasserted, etc. It is represented numerically by the numerals 1 (one) and 0 (zero). A byte, containing 8 bits, can represent 256 different states. The binary number 11010111, for example, has the value of 215 in our base 10 numbering system. When a value is carried digitally, each additional bit of resolution will double the number of different states that can be represented. Systems that operate with a greater number of bits of resolution, or quantization, will be able to capture a signal with more detail or fidelity. Thus, a video digitizer with 12 bits of resolution will capture 4 times as much detail as one with 10 bits.
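The arithmetic in this entry can be checked with a few lines of Python. This is only a sketch of the numbers quoted above; the variable names are illustrative.

```python
# The binary number from the entry, converted to base 10.
value = int("11010111", 2)

# Number of distinct states a byte (8 bits) can represent.
states_in_byte = 2 ** 8

# Each extra bit doubles the number of states, so 12 bits versus 10 bits
# gives 2 * 2 = 4 times as many levels.
levels_ratio = 2 ** 12 // 2 ** 10
```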

Blanking

The Horizontal and Vertical Blanking Intervals of a television signal refer to the time periods between lines and between fields. No picture information is transmitted during these times, which are required in CRT displays to allow the electron beam to be repositioned for the start of the next line or field. They also are used to carry synchronizing pulses which are used in transmission and recovery of the image. Although some of these needs are disappearing, the intervals themselves are retained for compatibility purposes. They have turned out to be very useful for the transmission of additional content, such as teletext and embedded audio.

CAV

Component Analog Video. This is a convenient shorthand form, but it is subject to confusion. It is sometimes used to mean ONLY color difference component formats (SMPTE or Beta), and other times to include RGB format. In any case, a CAV signal will always require 3 connectors— either Y/R-Y/B-Y, or R/G/B.

Component

In a component video system, the totality of the image is carried by three separate but related components. This method provides the best image fidelity with the fewest artifacts, but it requires three independent transmission paths (cables, for example). The commonly used component formats are Luminance and Color Difference (Y/Pr/Pb), and RGB. It was far too unwieldy in the early days of color television to ever consider component transmission.

Composite

Composite television dates back to the early days of color transmission. This scheme encodes the color difference information onto a color subcarrier. The instantaneous phase of the subcarrier is the color’s hue, and the amplitude is the color’s saturation or intensity. This subcarrier is then added onto the existing luminance video signal. This trick works because the subcarrier is set at a high enough frequency to leave room in the spectrum for the chrominance information. But it is not a seamless matter to pull the signal apart again at the destination in order to display it or process it. The resultant artifacts of dot crawl (also referred to as chroma crawl) are only the most obvious result. Composite television is the most commonly used format throughout the world, either as PAL or NTSC. It is also referred to as Encoded video.

Color Difference

Color Difference systems take advantage of the physiological details of human vision. We have more acuity in our black and white vision than we do in color. This means that we need only the luminance information to be carried at full bandwidth; we can scrimp on the color channels. In order to do this, RGB information is converted to carry all of the luminance (Y is the black and white of the scene) in a single channel. The other two channels are used to carry the “color difference.” Noted as B-Y and R-Y, these two signals describe how a particular pixel “differs” from being purely black and white. These channels typically have only half the bandwidth of the luminance.
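The conversion described above can be sketched as follows. The luminance weights are the ITU-R 601 values, which is an assumption here since the entry does not name a particular weighting. Scaling of the color difference signals for transmission is left out.

```python
# ITU-R 601 luminance weights (assumed; the entry does not specify them).
KR, KG, KB = 0.299, 0.587, 0.114

def rgb_to_color_difference(r, g, b):
    """Convert normalized R, G, B (0.0 to 1.0) to Y, B-Y, R-Y."""
    y = KR * r + KG * g + KB * b  # luminance: the black and white of the scene
    return y, b - y, r - y        # the two unscaled color difference signals

gray = rgb_to_color_difference(0.5, 0.5, 0.5)
red = rgb_to_color_difference(1.0, 0.0, 0.0)
```

For a neutral gray both color difference values come out at (essentially) zero, which matches the idea that they describe how far a pixel departs from pure black and white.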

Checkfield

A Checkfield signal is a special test signal that stresses particular aspects of serial digital transmission. The performance of the Phase Locked-Loops (PLLs) in an SDI receiver must be able to tolerate long runs of 0’s and 1’s. Under normal conditions, only very short runs of these are produced due to a scrambling algorithm that is used. The Checkfield, also referred to as the Pathological test signal, will “undo” the scrambling and cause extremely long runs to occur. This test signal is very useful for testing transmission paths.

Chroma

The color or Chroma content of a signal, consisting of the hue and saturation of the image. See Color Difference.

Decibel (dB)

The Decibel is a unit of measure used to express the ratio in the amplitude or power of two signals. For amplitude (voltage), a difference of 20 dB corresponds to a 10:1 ratio between two signals, and 6 dB is approximately a 2:1 ratio. Decibels add while the ratios multiply, so 26 dB is a 20:1 ratio, and 14 dB is a 5:1 ratio. There are several special cases of the dB scale, where the reference is implied. Thus, dBm refers to power relative to 1 milliwatt, and dBu refers to voltage relative to 0.775 V RMS. The original unit of measure was the Bel (10 times bigger), named after Alexander Graham Bell.
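The ratios quoted in this entry follow from the usual definitions, 20·log10 for amplitude and 10·log10 for power. A minimal sketch, with illustrative function names:

```python
import math

def db_to_amplitude_ratio(db):
    """Amplitude (voltage) ratio corresponding to a level difference in dB."""
    return 10 ** (db / 20)

def db_to_power_ratio(db):
    """Power ratio corresponding to a level difference in dB."""
    return 10 ** (db / 10)

def amplitude_ratio_to_db(ratio):
    """Level difference in dB for a given amplitude (voltage) ratio."""
    return 20 * math.log10(ratio)

# Decibels add while ratios multiply: 20 dB + 6 dB is about 10 x 2 = 20:1.
ratio_26_db = db_to_amplitude_ratio(20) * db_to_amplitude_ratio(6)
```

Note that 6 dB is only approximately 2:1, so the 26 dB and 14 dB figures come out close to, not exactly, 20:1 and 5:1.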

dBFS

In Digital Audio systems, the largest numerical value which can be represented is referred to as Full Scale. No values or audio levels greater than FS can be reproduced because they would be clipped. The nominal operating point (roughly corresponding to 0 VU) must be set below FS in order to have headroom for audio peaks. This operating point is described relative to FS, so a digital reference level of -20 dBFS has 20 dB of headroom before hitting the FS clipping point.
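To make the relationship concrete, the sketch below converts a sample value to dBFS and reports the headroom of a reference level. It assumes signed PCM samples, with Full Scale taken as the largest positive code; the function names are hypothetical.

```python
import math

def sample_to_dbfs(sample, bits=24):
    """Level of a signed PCM sample relative to Full Scale, in dBFS."""
    full_scale = 2 ** (bits - 1) - 1  # largest positive value (assumed FS)
    if sample == 0:
        return -math.inf              # digital silence has no finite level
    return 20 * math.log10(abs(sample) / full_scale)

def headroom_db(reference_dbfs):
    """Headroom between a reference level and the FS clipping point."""
    return -reference_dbfs

full_scale_level = sample_to_dbfs(2 ** 23 - 1, bits=24)
reference_headroom = headroom_db(-20)
```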

DNR

Digital (or Dynamic) Noise Reduction. A technique to reduce the noise content of a signal by taking advantage of the repetitive nature of a television signal. Since noise is random, its average value over time is zero. By looking at sequential frames of a television signal, the noise can be averaged out.
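The averaging idea can be sketched on synthetic data. This example assumes a completely static picture and Gaussian noise. Practical noise reducers must also cope with motion, which is not attempted here. All names and values are illustrative.

```python
import math
import random

def average_frames(frames):
    """Average a list of frames pixel by pixel (each frame is a list of values)."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]

def rms_error(frame, reference):
    """Root-mean-square difference between a frame and the clean reference."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(frame, reference)) / len(frame))

random.seed(1)                      # fixed seed so the run is repeatable
clean = [100.0] * 1000              # a flat, static test picture
noisy = [[p + random.gauss(0, 10) for p in clean] for _ in range(16)]

single_frame_error = rms_error(noisy[0], clean)
averaged_error = rms_error(average_frames(noisy), clean)
```

Averaging 16 frames of uncorrelated noise should reduce the RMS noise by roughly a factor of four (the square root of 16).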

DVI

Digital Visual Interface. DVI-I (integrated) provides both digital and analog connectivity. The larger group of pins on the connector are digital while the four pins on the right are analog.

EDH

Error Detection and Handling is a method to verify proper reception of an SDI or HD SDI signal at the destination. The originating device inserts a data packet in the vertical interval of the signal which contains a checksum of the entire video frame. This checksum is formed by adding up the numerical values of all of the samples in the frame, using a complex formula. At the destination this same formula is applied to the incoming video and the resulting value is compared to the one included in the transmission. If they match, then the content has all arrived with no errors. If they don’t, then an error has occurred.
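The send-and-compare scheme described above can be sketched with a cyclic redundancy check standing in for the "complex formula". The CRC-CCITT routine from the Python standard library is used here as an assumption for illustration. Real EDH operates on 10-bit video samples and defines its own packet layout, neither of which is modeled.

```python
import binascii

def frame_check_word(samples: bytes) -> int:
    """Compute a check word over all samples of a frame (CRC-CCITT stand-in)."""
    return binascii.crc_hqx(samples, 0)

def received_ok(samples: bytes, transmitted_check: int) -> bool:
    """Recompute the check word at the destination and compare."""
    return frame_check_word(samples) == transmitted_check

# Originating device: compute the check word and send it with the frame.
frame = bytes(range(256)) * 4
check = frame_check_word(frame)

# Destination: a clean frame matches, a frame with one flipped bit does not.
corrupted = bytearray(frame)
corrupted[10] ^= 0x01
clean_result = received_ok(frame, check)
corrupt_result = received_ok(bytes(corrupted), check)
```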

Embedded Audio

Digital Audio can be carried along in the same bitstream as an SDI or HD SDI signal by taking advantage of the gaps in the transmission which correspond to the horizontal and vertical intervals of the television waveform. This technique can be very cost-effective in transmission and routing, but can also add complexity to signal handling issues because the audio content can no longer be treated independently of the video.

Eye Pattern

To analyze a digital bitstream, the signal can be displayed visually on an oscilloscope by triggering the horizontal timebase with a clock extracted from the stream. Since the bit positions in the stream form a very regular cadence, the resulting display will look like an eye—an oval with slightly pointed left and right ends. It is easy to see from this display if the eye is “open,” with a large central area that is free of negative or positive transitions, or “closed” where those transitions are encroaching toward the center. In the first case, the open eye indicates that recovery of data from the stream can be made reliably and with few errors. But in the closed case data will be difficult to extract and bit errors will occur. Generally it is jitter in the signal that is the enemy of the eye.

Frame Sync

A Frame Synchronizer is used to synchronize the timing of a video signal to coincide with a timing reference (usually a color black signal that is distributed throughout a facility). The synchronizer accomplishes this by writing the incoming video into a frame buffer memory under the timing direction of the sync information contained in that video. Simultaneously the memory is being read back by a timing system that is genlocked to a house reference. As a result, the timing or alignment of the video frame can be adjusted so that the scan of the upper left corner of the image is happening simultaneously on all sources. This is a requirement for both analog and digital systems in order to perform video effects or switch glitch-free in a router. Frame synchronization can only be performed within a single television line standard. A synchronizer will not convert an NTSC signal to a PAL signal; it takes a standards converter to do that.

Frequency Response

A measurement of the accuracy of a system to carry or reproduce a range of signal frequencies. Similar to Bandwidth.

H.264

The latest salvo in the compression wars is H.264, which is also known as MPEG-4 Part 10. H.264 promises good results at just half the bit rate required by the currently dominant standard, MPEG-2.

High Definition (HD)

High Definition. This two letter acronym has certainly become very popular. Here we thought it was all about the pictures—and the radio industry stole it.

HDMI

The High Definition Multimedia Interface comes to us from the consumer marketplace where it is becoming the de facto standard for the digital interconnect of display devices to audio and video sources. It is an uncompressed, all-digital interface that transmits digital video and eight channels of digital audio. HDMI is a bit serial interface that carries the video content in digital component form over multiple twisted-pairs. HDMI is closely related to the DVI interface for desktop computers and their displays.

IEC

The International Electrotechnical Commission provides a wide range of worldwide standards. Among them, they have provided standardization of the AC power connection to products by means of an IEC line cord. The connection point uses three flat contact blades in a triangular arrangement, set in a rectangular connector. The IEC specification does not dictate line voltage or frequency. Therefore, the user must take care to verify that a device either has a universal input (capable of 90 to 230 volts, either 50 or 60 Hz), or that a line voltage switch, if present, is set correctly.

Interlace

Human vision can be fooled into seeing motion by presenting a series of images, each with a small change relative to the previous image. In order to eliminate flicker, our eyes need to see more than 30 images per second. This is accomplished in television systems by dividing the lines that make up each video frame (which run at 25 or 30 frames per second) into two fields. All of the odd-numbered lines are transmitted in the first field, and the even-numbered lines in the second. In this way the repetition rate is 50 or 60 Hz, without using more bandwidth. This trick has worked well for years, but it introduces other temporal artifacts. Motion pictures use a slightly different technique to raise the repetition rate from the original 24 frames that make up each second of film: they just project each one twice.
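The division of a frame into two fields can be sketched as below, treating a frame as a simple list of lines numbered from 1. The function names are hypothetical, and real formats differ in which field carries the topmost line.

```python
def split_into_fields(frame_lines):
    """Split a frame into (first_field, second_field).

    Lines are numbered from 1, so list index 0 holds line 1 (odd).
    """
    first_field = frame_lines[0::2]   # lines 1, 3, 5, ...
    second_field = frame_lines[1::2]  # lines 2, 4, 6, ...
    return first_field, second_field

def weave_fields(first_field, second_field):
    """Interleave two fields back into a full frame."""
    frame = []
    for odd_line, even_line in zip(first_field, second_field):
        frame.extend([odd_line, even_line])
    # An odd line count leaves one extra line at the end of the first field.
    frame.extend(first_field[len(second_field):])
    return frame

lines = list(range(1, 11))            # a toy 10-line frame
first, second = split_into_fields(lines)
rebuilt = weave_fields(first, second)
```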

IRE

Video level is measured on the IRE scale, where 0 IRE is black, and 100 IRE is full white. The actual voltages to which these levels correspond can vary between formats.

ITU-R601

This is the principal specification for standard definition component digital video. It defines the luminance and color difference coding system that is also referred to as 4:2:2. The standard applies to both 625 line/50 Hz (PAL) and 525 line/60 Hz (NTSC) derived signals. Both result in an image that contains 720 pixels horizontally, with 486 lines vertically in 525-line systems and 576 lines vertically in 625-line systems. Both systems use a sample clock rate of 27 MHz, and are serialized at 270 Mb/s.
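The 27 MHz and 270 Mb/s figures are related by simple arithmetic. The sketch below assumes the usual 4:2:2 sampling rates (13.5 MHz for luminance and 6.75 MHz for each color difference channel) and 10-bit words, none of which are stated in the entry itself.

```python
LUMA_RATE_HZ = 13_500_000     # Y sample rate (assumed 4:2:2 value)
CHROMA_RATE_HZ = 6_750_000    # rate of each color difference channel (assumed)
BITS_PER_WORD = 10            # word size on the serial link (assumed)

# Y plus the two half-rate color difference channels, multiplexed together.
word_rate_hz = LUMA_RATE_HZ + 2 * CHROMA_RATE_HZ

# Each word is sent one bit at a time on the serial interface.
serial_rate_bps = word_rate_hz * BITS_PER_WORD
```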

Jitter

Serial Digital signals (either video or audio) are subject to the effects of Jitter. This refers to the instantaneous error that can occur from one bit to the next in the exact position of each digital transition. Although the signal may be at the correct frequency on average, in the short term it varies. Some bits come slightly early, others come slightly late. The measurement of this jitter is given either as the amount of time uncertainty or as the fraction of a bit width. For 270 Mb/s video, the allowable jitter is 740 picoseconds, or 0.2 UI (Unit Interval—one bit width).
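The two ways of quoting jitter are related through the bit rate, since one Unit Interval is simply one bit period. A minimal sketch with illustrative function names:

```python
def unit_interval_seconds(bit_rate_bps):
    """Duration of one Unit Interval (one bit width) in seconds."""
    return 1.0 / bit_rate_bps

def jitter_ui_to_seconds(jitter_ui, bit_rate_bps):
    """Convert jitter expressed in UI to seconds."""
    return jitter_ui * unit_interval_seconds(bit_rate_bps)

# 0.2 UI at 270 Mb/s, expressed in picoseconds.
jitter_ps = jitter_ui_to_seconds(0.2, 270e6) * 1e12
```

At 270 Mb/s one UI is about 3.7 nanoseconds, so 0.2 UI works out to roughly 740 picoseconds, in line with the figure above.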

Luminance

The “black & white” content of the image. Human vision has more acuity in luminance, so television systems generally devote more bandwidth to the luminance content. In component systems, the luminance is referred to as Y.

MPEG

The Moving Picture Experts Group is an industry group that develops standards for the compression of moving pictures for television. Their work is an ongoing effort. The understanding of image processing and information theory is constantly expanding. And the raw bandwidth of both the hardware and software used for this work is ever increasing. Accordingly, the compression methods available today are far superior to the algorithms that originally made the real-time compression and decompression of television possible. Today, there are many variations of these techniques, and the term MPEG has to some extent become a broad generic label.

Metadata

The Greek word meta means “beyond” or “after.” When used as a prefix to “data,” it can be thought of as “data about the data.” In other words, the metadata in a data stream tells you about that data—but it is not the data itself. In the television industry, this word is sometimes used correctly when, for example, we label as metadata the timecode which accompanies a video signal. That timecode tells you something about the video, i.e. when it was shot, but the timecode in and of itself is of no interest. But in our industry’s usual slovenly way in matters linguistic, the term metadata has also come to be used to describe data that is associated with the primary video in a datastream. So embedded audio will (incorrectly) be called metadata when it tells us nothing at all about the pictures. Oh well.

NTSC

The color television encoding system used in North America was originally defined by the National Television System Committee. This American standard has also been adopted by Canada, Mexico, Japan, Korea, and Taiwan. This standard is referred to disparagingly as Never The Same Color or Never Twice the Same Color.

Oversampling

To perform digital sampling at a multiple of the required sample rate. This has the advantage of raising the Nyquist frequency (the maximum frequency which can be reproduced by a given sample rate, equal to half that rate) much higher than the desired passband. This allows the use of more easily realized antialias filters.

PAL

During the early days of color television in North America, European broadcasters developed a competing system called Phase Alternation by Line. This slightly more complex system is better able to withstand the differential gain and phase errors that appear in analog amplifiers and transmission systems. Engineers at the BBC claim that it stands for Perfection At Last.

Pathological Test Pattern

See Checkfield.

Progressive

An image-scanning technique which progresses through all of the lines of a frame in a single pass. Computer monitors all use progressive displays. This contrasts to the Interlace technique common to television systems.

Return Loss

An idealized input or output circuit will exactly match its desired impedance (generally 75 ohms) as a purely resistive element, with no reactive (capacitive or inductive) elements. In the real world we can only approach the ideal. So our real inputs and outputs will have some capacitance and inductance. This will create impedance-matching errors, especially at higher frequencies. The Return Loss of an input or output measures how much energy is returned (reflected back due to the impedance mismatch). For digital circuits, a return loss of 15 dB is typical. This means that the energy returned is 15 dB less than the original signal. In analog circuits, a 40 dB figure is expected.
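Return loss can be estimated from the actual and nominal impedances using the standard reflection coefficient formula. The sketch below is illustrative only. The function names are hypothetical, and the load may be given as a complex number to include reactive parts.

```python
import math

def return_loss_db(load_ohms, nominal_ohms=75.0):
    """Return loss in dB for a load impedance against the nominal impedance."""
    # Reflection coefficient magnitude: |(Z - Z0) / (Z + Z0)|
    gamma = abs((load_ohms - nominal_ohms) / (load_ohms + nominal_ohms))
    if gamma == 0:
        return math.inf  # a perfect match reflects nothing
    return -20 * math.log10(gamma)

def reflected_power_fraction(return_loss):
    """Fraction of the incident power that is reflected back."""
    return 10 ** (-return_loss / 10)

mismatch_rl = return_loss_db(82.5)         # a 10% high resistive load
typical_digital = reflected_power_fraction(15)
```

A 15 dB return loss corresponds to about 3% of the power being reflected, while the 40 dB expected of analog circuits corresponds to 0.01%.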

RGB

RGB systems carry the totality of the picture information as independent Red, Green and Blue signals. Television is an additive color system, where all three components add to produce white. Because the luminance (or detail) information is carried partially in each of the RGB channels, all three must be carried at full bandwidth in order to faithfully reproduce an image.

ScH Phase

Used in composite systems, ScH Phase measures the relative phase between the leading edge of sync on line 1 of field 1 and a continuous subcarrier sinewave. Due to the arithmetic details of both PAL and NTSC, this relationship is not the same at the beginning of each frame. In PAL, the pattern repeats every 4 frames (8 fields) which is also known as the Bruch Blanking sequence. In NTSC, the repeat is every 2 frames (4 fields). This creates enormous headaches in editing systems and the system timing of analog composite facilities.

SDI

Serial Digital Interface. This term refers to inputs and outputs of devices that support serial digital component video. This could refer to standard definition at 270 Mb/s, HD SDI or High Definition Serial Digital video at 1.485 Gb/s, or to the newer 3G standard of High Definition video at 2.97 Gb/s.

SMPTE

The Society of Motion Picture and Television Engineers is a professional organization that has done tremendous work in setting standards for both the film and television industries. The term “SMPTE” is also shorthand for one particular component video format—luminance and color difference.

TBC

A Time Base Corrector is a system to reduce the Time Base Error in a signal to acceptable levels. It accomplishes this by using a FIFO (First In, First Out) memory. The incoming video is written into the memory using its own jittery timing. This operation is closely associated with the actual digitization of the analog signal because the varying position of the sync timing must be mimicked by the sampling function of the analog-to-digital converter. A second timing system, genlocked to a stable reference, is used to read the video back out of the memory. The memory acts as a dynamically adjusting delay to smooth out the imperfections in the original signal’s timing. Very often a TBC will also function as a Frame Synchronizer. See also: Frame Sync.

Time Base Error

Time Base Error is present when there is excessive jitter or uncertainty in the line-to-line output timing of a video signal. This is commonly associated with playback from videotape recorders, and is particularly severe with consumer type heterodyne systems like VHS. Time base error will render a signal unusable for broadcast or editing purposes.

Time Code

Timecode, a method to uniquely identify and label every frame in a video stream, has become one of the most recognized standards ever developed by SMPTE. It uses a 24-hour clock, consisting of hours, minutes, seconds, and television frames. Originally recorded on a spare audio track, this 2400-baud signal was a significant contributor to the development of videotape editing. We now refer to this as LTC or Longitudinal Time Code because it was carried along the edge of the tape. This allowed it to be recovered in rewind and fast forward when the picture itself could not. Timecode continues to be useful today and is carried in the vertical interval as VITC, and as a digital packet as DVITC. Timecode is the true metadata.
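The hours, minutes, seconds, and frames labelling can be sketched as a conversion from a running frame count. This example assumes a whole-number frame rate with no drop-frame compensation, and the figure of 80 bits per LTC frame is an assumption not stated in the entry. The names are illustrative.

```python
def frames_to_timecode(frame_count, fps=30):
    """Convert a frame count to HH:MM:SS:FF (non-drop-frame, integer fps)."""
    frames_per_day = 24 * 3600 * fps
    frame_count %= frames_per_day          # the 24-hour clock wraps around
    ff = frame_count % fps
    total_seconds = frame_count // fps
    ss = total_seconds % 60
    mm = (total_seconds // 60) % 60
    hh = total_seconds // 3600
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"

# Assumed LTC word length; at 30 frames per second this gives 2400 bits/s.
LTC_BITS_PER_FRAME = 80
ltc_bit_rate = LTC_BITS_PER_FRAME * 30

one_hour = frames_to_timecode(108_000, fps=30)
```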

Tri-Level Sync

For many, many years, television systems used composite black as a genlock reference source. This was a natural evolution from analog systems to digital implementations. With the advent of High Definition television, with even higher data rates and tighter jitter requirements, problems with this legacy genlock signal surfaced. Further, a reference signal with a 50 or 60 Hz frame rate was useless with 24 Hz HD systems running at film rates. Today we can think of composite black as a bi-level sync signal—it has two levels, one at sync tip and one at blanking. For HD systems, Tri-Level Sync has the same blanking level (at ground) of bi-level sync, but the sync pulse now has both a negative and a positive element. This keeps the signal symmetrically balanced so that its DC content is zero. And it also means that the timing pickoff point is now at the point where the signal crosses blanking and is no longer subject to variation with amplitude. This makes Tri-Level Sync a much more robust signal and one which can be delivered with less jitter.

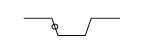

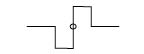

Tri-Level sync offers improved timing accuracy over traditional bi-level sync.

The analog output of a standard sync generator is bi-level sync. The timing reference is the 50% point of the leading edge.

The relative timing of this point shifts with changes in gain, DC reference, and frequency response.

The timing reference in tri-level sync is also at the 50% point of the sync pulse, but because this pulse has both the positive and negative excursions, this point is the same as the DC ground reference. This symmetry makes the signal virtually immune to time shift from gain, DC and response errors. The zero point never drifts – the zero crossing is easy to detect every time which ensures timing accuracy.

USB

The Universal Serial Bus, developed in the computer industry to replace the previously ubiquitous RS-232 serial interface, now appears in many different forms and with many different uses. It actually forms a small local area network, allowing multiple devices to coexist on a single bus where they can be individually addressed and accessed.

VGA

Video Graphics Array. Traditional 15-pin, analog interface between a PC and monitor.

WSS

Wide Screen Signaling is used in the PAL/625 video standards, both in analog and digital form, to convey information about the aspect ratio and format of the transmitted signal. Carried in the vertical interval, much like closed captioning, it can be used to signal a television receiver to adjust its vertical or horizontal sizing to reflect incoming material. Although an NTSC specification for WSS exists, it never achieved any traction in the marketplace.

Word Clock

Use of Word Clock to genlock digital audio devices developed in the audio recording industry. Early digital audio products were interconnected with a massive parallel connector carrying a twisted pair for every bit in the digital audio word. On the rising edge of the Word Clock these twisted pairs would carry the left channel, while on the falling edge they would carry the right channel. The clock signal itself, which is a square wave at the audio sampling frequency, is carried on a 75 ohm coaxial cable. Early systems would daisy-chain this 44.1 or 48 kilohertz clock from one device to another with coax cable and Tee connectors. In most television systems using digital audio, the audio sample clock frequency (and hence the “genlock” between the audio and video worlds) is derived from the video genlock signal. But products that are purely audio, with no video reference capability, may still require Word Clock.

YUV

Strictly speaking, YUV does not apply to component video. The letters refer to the Luminance (Y), and the U and V encoding axes used in the PAL composite system. Since the U axis is very close to the B-Y axis, and the V axis is very close to the R-Y axis, YUV is often used as a casual shorthand for the more long-winded (and correct) “Y/R-Y/B-Y.”

Y/Cr/Cb

In digital component video, the luminance component is Y, and the two color difference signals are Cr (R-Y) and Cb (B-Y).

Y/Pr/Pb

In analog component video, the image is carried in three components. The luminance is Y, the R-Y color difference signal is Pr, and the B-Y color difference signal is Pb.